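Getting to Know Kubebuilder

Preface

Nobody likes a black box. Before I use a tool, I like to learn as much about it as I can; otherwise I never feel comfortable relying on it. I am already familiar with how a controller works, so the kubebuilder source code should be fairly easy to follow. I therefore skimmed through it, and this article picks out a few key points to help understand kubebuilder.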

Concepts and Terms

The following terms are important and come up repeatedly in this article.

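Own Resource

A CRD is usually designed to manage a combination of Kubernetes built-in resources in order to implement custom deployment and runtime logic. It is a higher-level wrapper, so the lower-level built-in resource instances that it wraps are called Own Resources.

Owner Resource

Conversely, the CRD instance that manages those Own Resources as the higher-level unit is called the Owner Resource.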

Kind & Resource

In the vast majority of cases the two correspond one to one. For example, every Pod resource belongs to the Pod Kind. Put simply, a Kind identifies the type of a resource.

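GVK & GVR

GVK = Group + Version + Kind. It describes a type of object. For example, the GVK of the Deployment kind is apps/v1, Kind=Deployment.

GVR = Group + Version + Resource. It identifies the resource collection as the API exposes it, for example apps/v1, Resource=deployments. A single Deployment named deploy-sample is then addressed by that GVR plus its namespace and name.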
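To make the difference between the two concrete, here is a minimal sketch. The two struct types are local stand-ins that only mirror the shape of the types in k8s.io/apimachinery/pkg/runtime/schema, and the helper methods CollectionPath and ObjectPath are hypothetical names invented for this example.

```go
package main

import "fmt"

// Local stand-ins mirroring the shape of schema.GroupVersionKind and
// schema.GroupVersionResource; they are not the real apimachinery types.
type GroupVersionKind struct{ Group, Version, Kind string }
type GroupVersionResource struct{ Group, Version, Resource string }

// CollectionPath (hypothetical helper) builds the REST path of a namespaced
// resource collection. The core group (empty Group) is served under /api,
// named groups under /apis.
func (r GroupVersionResource) CollectionPath(namespace string) string {
	prefix := "/apis/" + r.Group
	if r.Group == "" {
		prefix = "/api"
	}
	return fmt.Sprintf("%s/%s/namespaces/%s/%s", prefix, r.Version, namespace, r.Resource)
}

// ObjectPath (hypothetical helper) addresses one instance: GVR plus namespace and name.
func (r GroupVersionResource) ObjectPath(namespace, name string) string {
	return r.CollectionPath(namespace) + "/" + name
}

func main() {
	gvk := GroupVersionKind{Group: "apps", Version: "v1", Kind: "Deployment"}
	gvr := GroupVersionResource{Group: "apps", Version: "v1", Resource: "deployments"}
	fmt.Printf("%+v\n", gvk)
	fmt.Println(gvr.ObjectPath("default", "deploy-sample"))
}
```

The Kind names the Go type that is serialized, while the Resource is the lowercase plural segment that appears in the URL.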

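Scheme

Every resource type has to be registered in a Scheme. The Scheme struct holds the gvkToType and typeToGVK mappings, and the APIServer relies on the Scheme to serialize and deserialize resources.

The Scheme struct looks like this:

`type Scheme struct {`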
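The two mappings can be illustrated with a toy registry. This is a sketch, not the apimachinery implementation: only the field names gvkToType and typeToGVK come from the text above, while AddKnownType, the Unit placeholder type, and the simplified signatures are assumptions made for the example.

```go
package main

import (
	"fmt"
	"reflect"
)

type GroupVersionKind struct{ Group, Version, Kind string }

// Scheme is a toy registry; only the two field names come from the article.
type Scheme struct {
	gvkToType map[GroupVersionKind]reflect.Type
	typeToGVK map[reflect.Type][]GroupVersionKind
}

func NewScheme() *Scheme {
	return &Scheme{
		gvkToType: map[GroupVersionKind]reflect.Type{},
		typeToGVK: map[reflect.Type][]GroupVersionKind{},
	}
}

// AddKnownType (hypothetical name) registers obj, a pointer to a struct, under gvk.
func (s *Scheme) AddKnownType(gvk GroupVersionKind, obj interface{}) {
	t := reflect.TypeOf(obj).Elem()
	s.gvkToType[gvk] = t
	s.typeToGVK[t] = append(s.typeToGVK[t], gvk)
}

// New creates an empty object for a GVK, as a decoder would before unmarshalling into it.
func (s *Scheme) New(gvk GroupVersionKind) (interface{}, error) {
	t, ok := s.gvkToType[gvk]
	if !ok {
		return nil, fmt.Errorf("no type registered for %+v", gvk)
	}
	return reflect.New(t).Interface(), nil
}

// ObjectKinds answers the reverse question: which GVKs is this Go type registered under?
func (s *Scheme) ObjectKinds(obj interface{}) []GroupVersionKind {
	return s.typeToGVK[reflect.TypeOf(obj).Elem()]
}

// Unit is a hypothetical placeholder for the CRD's Go type.
type Unit struct{ Name string }

func main() {
	s := NewScheme()
	gvk := GroupVersionKind{Group: "custom", Version: "v1", Kind: "Unit"}
	s.AddKnownType(gvk, &Unit{})

	obj, _ := s.New(gvk)
	fmt.Printf("%T\n", obj)
	fmt.Println(s.ObjectKinds(&Unit{}))
}
```

Decoding needs the GVK-to-type direction (find the Go type for incoming data), and encoding needs the type-to-GVK direction (stamp the right apiVersion and kind on outgoing data).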

组件

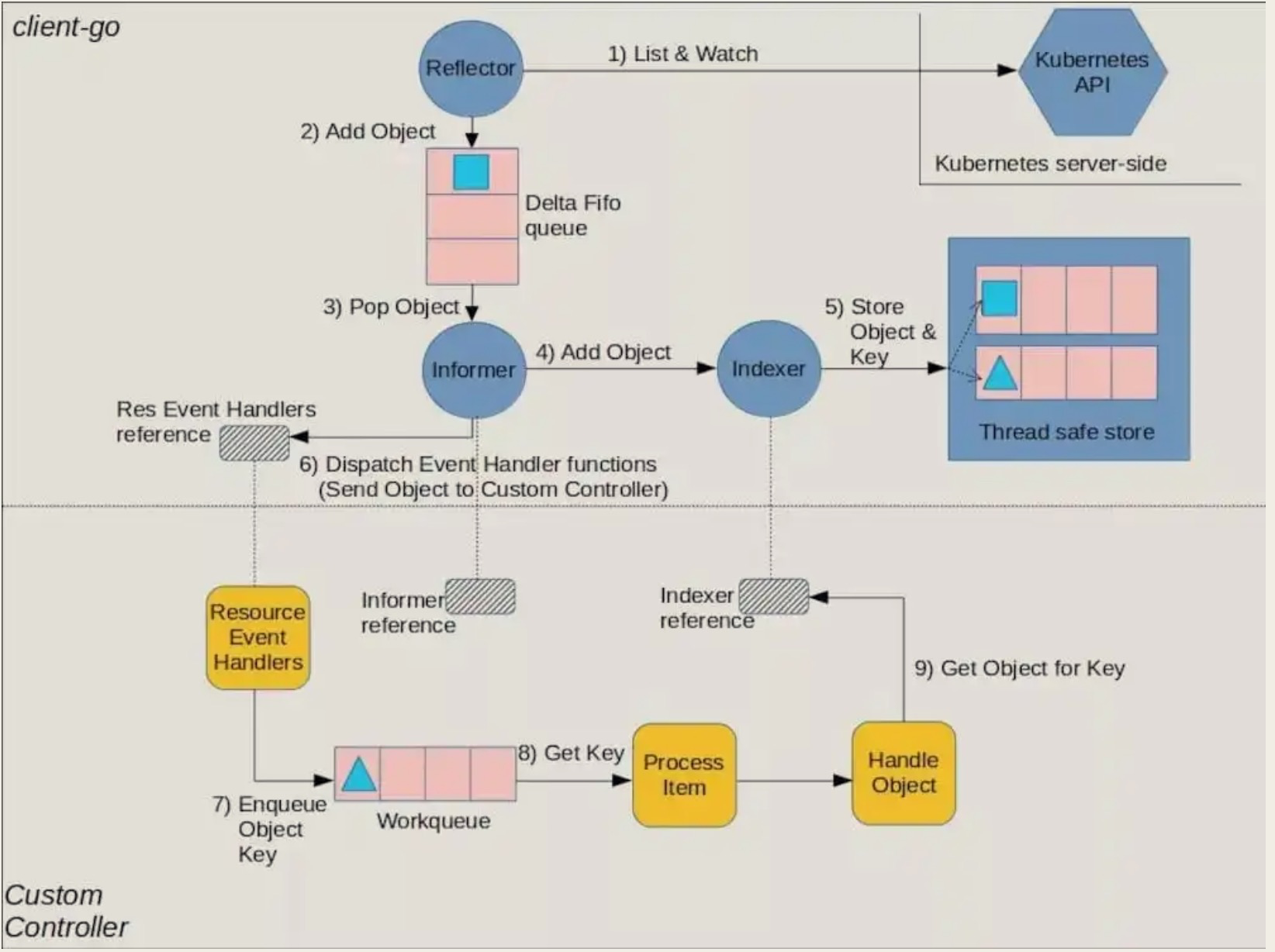

controller使用client-go包里的informer模式工作,向APIServer watch GVK下对应的GVR,并充分利用cache、index、queue,可参考这张图片再回顾一下这个工作流程:

与此对应的,kubebuilder大致有下面几种主要组件:

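Manager

The outermost management component of Kubebuilder. It initializes the controllers, cache, and clients.

Cache

An internal Kubebuilder component. It creates the SharedInformers that watch for changes (create, update, delete) to the resources of the GVKs you care about, which in turn trigger the Controller's Reconcile logic.

Clients

A controller needs to perform CRUD operations on resources, and these are wrapped in the Client. Write operations (create, update, delete) go directly to the APIServer, while read operations (get, list) are served from the local Cache.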
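The read/write split can be sketched with two small interfaces. Everything here is a simplified assumption: Reader, Writer, SplitClient, and the string-based store are hypothetical names for illustration, and the real controller-runtime client works on Kubernetes objects rather than strings.

```go
package main

import (
	"errors"
	"fmt"
)

// Reader and Writer are simplified, hypothetical interfaces.
type Reader interface {
	Get(key string) (string, error)
}
type Writer interface {
	Update(key, value string) error
}

// store plays both roles in this sketch: one instance stands in for the
// API server, another for the informer-backed local cache.
type store struct {
	name string
	data map[string]string
	hits int // number of calls served by this store
}

func (s *store) Get(key string) (string, error) {
	s.hits++
	v, ok := s.data[key]
	if !ok {
		return "", errors.New(key + " not found in " + s.name)
	}
	return v, nil
}

func (s *store) Update(key, value string) error {
	s.hits++
	s.data[key] = value
	return nil
}

// SplitClient sends reads to the cache and writes to the API server.
type SplitClient struct {
	Reader // served by the cache
	Writer // served by the API server
}

func main() {
	apiServer := &store{name: "apiserver", data: map[string]string{}}
	cache := &store{name: "cache", data: map[string]string{"default/unit-a": "v1"}}
	c := SplitClient{Reader: cache, Writer: apiServer}

	_ = c.Update("default/unit-a", "v2") // goes to the API server
	v, _ := c.Get("default/unit-a")      // answered by the cache
	fmt.Println(v, apiServer.hits, cache.hits)
}
```

One consequence of this design is that a read issued right after a write can still return the old value, because the cache only catches up once the informer has received the corresponding watch event.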

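Initialization

First, use kubebuilder to initialize a CRD project so that we have something to step into.

Before initializing, decide on the name of the CRD resource, which must not clash with an existing resource name, and on the API groupVersion. I recommend a custom API groupVersion so that it is clearly distinct from the built-in ones.

List all existing resources:

`kubectl api-resources -o wide`

List the existing API groupVersions:

`kubectl api-versions`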

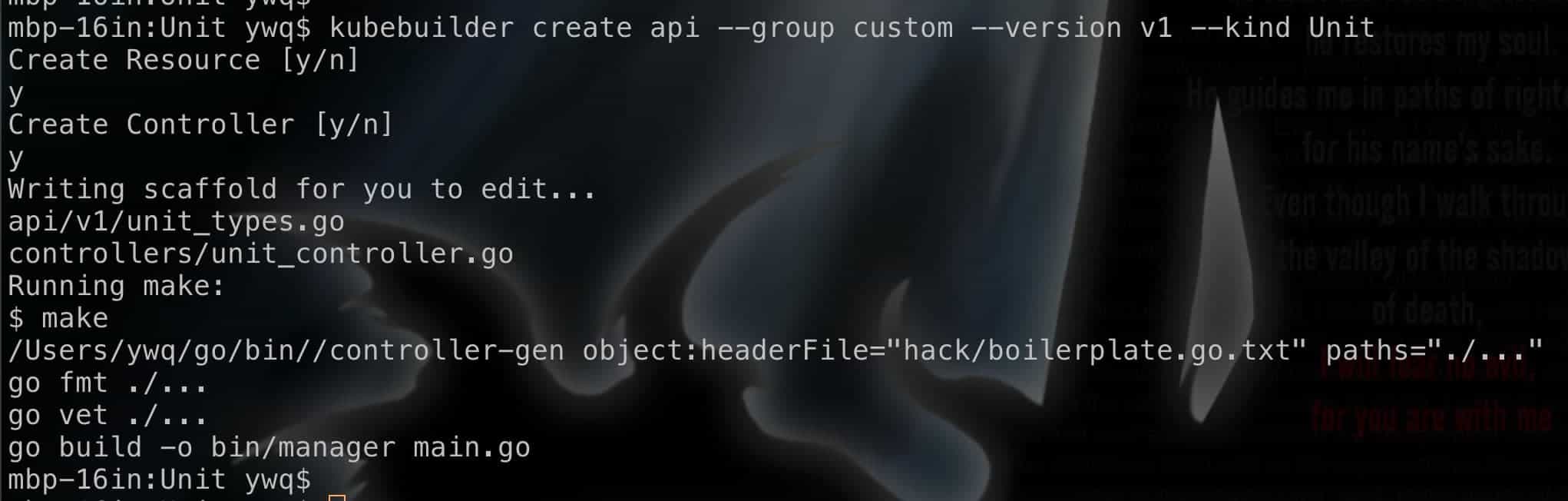

我的CRD 名称定为: Unit, api groupVersion定为 custom/v1

init

1 | # 自定义 |

需要交互两次:

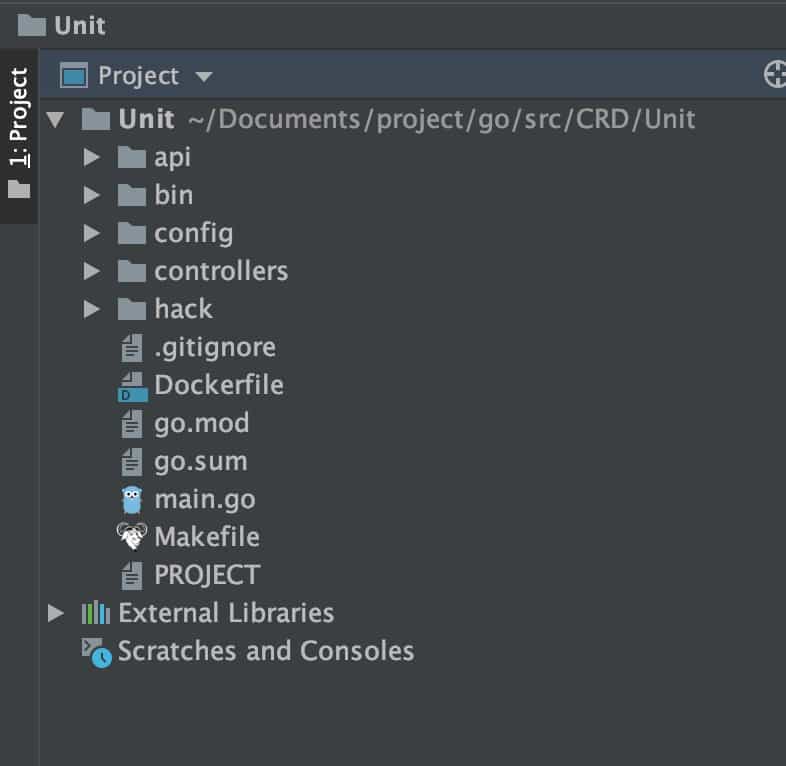

目录创建完毕,使用IDE打开看看:

目录结构

1 | mbp-16in:Unit ywq$ tree |

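Source Code Walkthrough

Start from main.go:

`func main() {`

The main function has three steps:

- new manager

- register reconciler

- start manager

The functions behind these steps live in the dependency packages rather than under the Unit directory. Let's go through them one by one.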

New manager

main.go:57

`mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{...})`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/alias.go:101`

`NewManager = manager.New`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:229`

`// New returns a new Manager for creating Controllers.`

Of these steps, NewCache and NewClient are the ones worth digging into.

NewCache

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:246`

`// options is set here; expand it to look at the NewCache method`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:351`

`func setOptionsDefaults(options Options) Options {`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/cache/cache.go:110`

`// New initializes and returns a new Cache.`

As you can see, this is where an Informer is created, based on the configuration, for each GVK that needs to be watched. Where those GVKs are configured will come up later.
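The idea of one shared informer per GVK can be sketched as a map that creates entries on first use. This is an assumption-laden illustration, not the controller-runtime code: informerMap, its Get method, and the empty informer placeholder are hypothetical, and a real SharedInformer would list and watch the APIServer.

```go
package main

import (
	"fmt"
	"sync"
)

type GroupVersionKind struct{ Group, Version, Kind string }

// informer is a placeholder; it does no listing or watching in this sketch.
type informer struct{ gvk GroupVersionKind }

// informerMap hands out one shared informer per GVK, creating it on first use.
type informerMap struct {
	mu        sync.Mutex
	informers map[GroupVersionKind]*informer
	created   int // how many informers have been created so far
}

func newInformerMap() *informerMap {
	return &informerMap{informers: map[GroupVersionKind]*informer{}}
}

// Get returns the existing informer for gvk, or creates and stores a new one.
func (m *informerMap) Get(gvk GroupVersionKind) *informer {
	m.mu.Lock()
	defer m.mu.Unlock()
	if inf, ok := m.informers[gvk]; ok {
		return inf
	}
	inf := &informer{gvk: gvk}
	m.informers[gvk] = inf
	m.created++
	return inf
}

func main() {
	m := newInformerMap()
	unit := GroupVersionKind{"custom", "v1", "Unit"}
	a := m.Get(unit)
	b := m.Get(unit) // same GVK, so the same informer is returned
	m.Get(GroupVersionKind{"apps", "v1", "Deployment"})
	fmt.Println(a == b, m.created)
}
```

Sharing matters because several controllers may watch the same GVK, and each extra informer would otherwise mean another watch connection and another copy of the objects in memory.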

NewClient

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:256`

`// options is set here; expand it to look at the NewClient method`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:351`

`// setOptionsDefaults set default values for Options fields`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/manager.go:320`

`func defaultNewClient(cache cache.Cache, config *rest.Config, options client.Options) (client.Client, error) {`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/client/client.go:54`

`// Use the scheme to look up the GVK that the given resource type belongs to.`

register reconciler

The reconciler is the controller. The name reconciler is the more fitting one, and the core logic of the controller lives here.

main.go:69

`if err = (&controllers.UnitReconciler{`

==> controllers/unit_controller.go:49

`func (r *UnitReconciler) SetupWithManager(mgr ctrl.Manager) error {`

The Complete method finishes the Builder chain: it calls Build, which creates the controller.

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/builder/controller.go:128`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/builder/controller.go:134`

`// Build builds the Application ControllerManagedBy and returns the Controller it created.`

The most important parts of the Builder are the doController() and doWatch() methods. Let's look at each in turn.

doController

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/builder/controller.go:145`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/builder/controller.go:213`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/builder/controller.go:35`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/controller/controller.go:63`

`// New returns a new Controller registered with the Manager. The Manager will ensure that shared Caches have`

As you can see, doController creates the Controller and registers it with the Manager, the outer component that hosts it.

The Controller struct instance contains the following fields:

`c := &controller.Controller{`

==> controllers/unit_controller.go:40

`func (r *UnitReconciler) Reconcile(req ctrl.Request) (ctrl.Result, error) {`

The Reconcile() method is here, and its logic is yours to implement. Later articles will describe my requirements, design, and code examples.

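doWatch

`func (blder *Builder) doWatch() error {`

doWatch() does two main things: it watches for changes to the CRD resource, and it watches for changes to the own resources of the CRD resource. What happens after a change is observed? It is handed to a handler, of course. Let's see what the handler created in the second line of this code does.

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/handler/enqueue.go:34`

`type EnqueueRequestForObject struct{}`

As expected, the handler takes the NamespacedName of the object involved in a create, update, or delete event and pushes it onto the workqueue. Meanwhile, at the other end, the Reconciler that watches the workqueue quietly starts working.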
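The handler's job of turning an event into a queue key can be sketched as follows. The names here are assumptions for illustration: dedupQueue is a tiny stand-in for the client-go workqueue, object is a hypothetical minimal view of an object's metadata, and enqueueForObject only imitates the role of EnqueueRequestForObject.

```go
package main

import "fmt"

// NamespacedName mirrors the shape of the apimachinery type of the same name.
type NamespacedName struct{ Namespace, Name string }

// Request is what the reconciler receives: only a key, never the object itself.
type Request struct{ NamespacedName }

// dedupQueue is a tiny stand-in for a workqueue: a key that is already
// waiting is not added a second time.
type dedupQueue struct {
	pending map[Request]bool
	items   []Request
}

func newDedupQueue() *dedupQueue { return &dedupQueue{pending: map[Request]bool{}} }

func (q *dedupQueue) Add(r Request) {
	if q.pending[r] {
		return
	}
	q.pending[r] = true
	q.items = append(q.items, r)
}

func (q *dedupQueue) Len() int { return len(q.items) }

// object is a hypothetical minimal view of a Kubernetes object's metadata.
type object struct{ Namespace, Name string }

// enqueueForObject treats create, update, and delete events the same way:
// it reduces the event to the object's key and adds that key to the queue.
type enqueueForObject struct{}

func (enqueueForObject) Create(o object, q *dedupQueue) { q.Add(keyOf(o)) }
func (enqueueForObject) Update(o object, q *dedupQueue) { q.Add(keyOf(o)) }
func (enqueueForObject) Delete(o object, q *dedupQueue) { q.Add(keyOf(o)) }

func keyOf(o object) Request {
	return Request{NamespacedName{Namespace: o.Namespace, Name: o.Name}}
}

func main() {
	q := newDedupQueue()
	h := enqueueForObject{}
	u := object{Namespace: "default", Name: "unit-a"}
	h.Create(u, q)
	h.Update(u, q) // same key, already pending, so nothing is added
	h.Delete(object{Namespace: "default", Name: "unit-b"}, q)
	fmt.Println(q.Len(), q.items)
}
```

Because only the key is queued, the reconciler cannot tell which kind of event occurred. It has to read the current state and converge toward the desired one, which is what makes the approach level-triggered.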

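All the registration work is now done. Next we return to main.go and start the outer hosting component, the Manager.

Starting the Manager

Starting the Manager takes two steps: first get the Cache component ready, then start the controller component.

Starting the Cache

main.go:80

`if err := mgr.Start(ctrl.SetupSignalHandler()); err != nil {`

The ctrl.NewManager() call above ultimately returns a pointer to a controllerManager{} object, so let's look for the Start() method on controllerManager.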

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/internal.go:403`

`func (cm *controllerManager) Start(stop <-chan struct{}) error {`

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/internal.go:465`

`func (cm *controllerManager) startLeaderElectionRunnables() {`

==> `/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/manager/internal.go:489`


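The main job of waitForCache is to start the Cache and wait for its first sync to complete.

Starting the cache involves creating the FIFO queue and initializing the informer, reflector, local store cache, indexers, and so on.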

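Starting the controller

How a controller works once it has started was covered many times in my earlier controller series, so here is just a quick recap.

`/Users/ywq/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.5.0/pkg/internal/controller/controller.go:146`

`func (c *Controller) Start(stop <-chan struct{}) error {`

The worker's logic ultimately ends up in the Reconcile method, that is, the Reconcile() in controllers/unit_controller.go:40 where you implement your custom logic, and it runs according to that logic.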

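Summary

If you have read the source of a built-in resource's Controller and understand how Controllers work, then understanding Kubebuilder is familiar ground.

In short, a controller created by kubebuilder does the same thing as the flow diagram at the top of this article. The difference is that kubebuilder is so highly encapsulated that you only need to implement the logic inside the Reconcile method; everything in between is handled for you in the standard controller way.

That concludes this overview of kubebuilder's core points. The next article will introduce the design of the Unit CRD: for example, how Unit manages its own resources, and how kubebuilder helps with granting permission to manage own resources and with keeping Unit and its own resources in sync.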